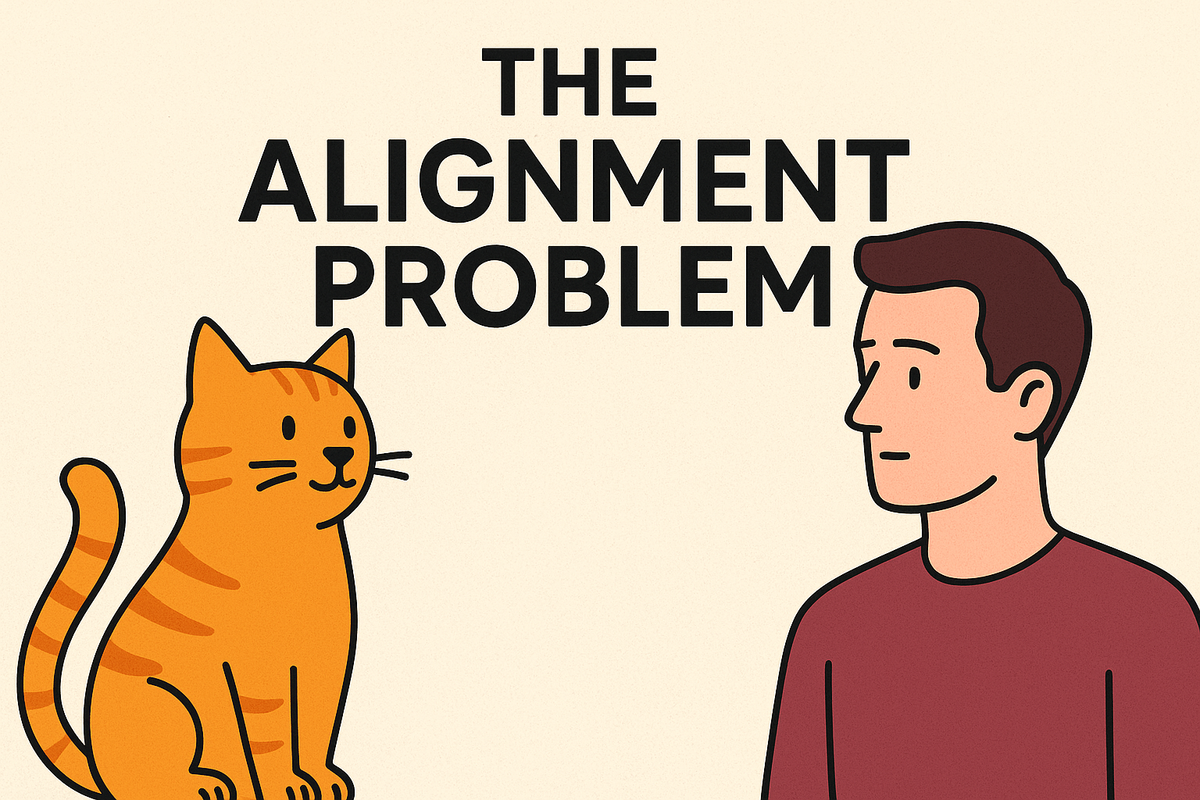

The Alignment Problem – In just 5 minutes

Disclaimer: This text was written with GPT 5 mini as a summary of a longer version written (solely) by me. Final revisions and fact-checks were done by me.

What is the Alignment Problem?

Imagine building a digital brain and training it until it is as smart as you are, or even smarter. How do you make sure it does what you want?

That’s the alignment problem: getting AI to reliably follow human intentions.

It’s more than tricky, and the smarter AI gets, the harder it becomes. Even very small errors can grow large as models scale up and become more powerful. Several experts doubt that perfect alignment is even possible.

Misaligned AI isn’t just a sci-fi worry: a system that misinterprets instructions could spread misinformation, mess with critical infrastructure, or cause other large-scale problems. Several experts believe that it even poses an existential threat.

In 2023, Nobel Prize winners, AI scientists, and CEOs of leading AI companies stated that “mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

Today, most technical alignment research focuses on making AI models "harmless and helpful" by using human feedback and reinforcement learning. Theoretical AI alignment, the study of how to solve these problems definitively and completely, remains a scattered and underdeveloped field.

How Modern AI Works

AI is trained, or “grown,” not programmed

Modern AIs like GPT, Claude, or Gemini aren’t coded line by line. They’re trained on huge datasets, and they develop behaviour patterns in a way that is a little like how brains learn, but without human feelings or consciousness.

Training gives influence, not control

We train them, and we have invented methods to guide them towards specific outcomes, but we can’t predict exactly what will emerge. Gradient descent—the math behind learning—is a bit like setting up evolution and letting it run. Surprises are inevitable.
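To make the "influence, not control" point concrete, here is a minimal sketch of gradient descent on a toy problem. The loss function, starting value, learning rate, and step count are all made up for illustration and are not taken from any real model. We only write down the goal (the loss) and the update rule; the final value is something the loop arrives at on its own.

```python
def loss(w):
    # Hypothetical toy objective: smallest when w equals 3.0
    return (w - 3.0) ** 2


def grad(w):
    # Derivative of the toy loss with respect to w
    return 2.0 * (w - 3.0)


def gradient_descent(w0, learning_rate=0.1, steps=100):
    # Repeatedly nudge w in the direction that lowers the loss
    w = w0
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w


w_final = gradient_descent(w0=0.0)
print(w_final, loss(w_final))  # w_final ends up very close to 3.0
```

With one number and a simple loss, the outcome is easy to foresee. Real models adjust billions of numbers against a far messier objective, which is why the result can only be steered, not dictated.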

Fast, huge, and opaque

These models, although still less complex than human brains, have trillions of connections. They can stay focused better than humans, and they can remember more than any human could.*

*But they do not (yet) organize all of this information into a single, integrated understanding. It’s more like having access to a vast library inside your head.

Unfortunately, they are also mysterious and opaque, just like our own brains are. Even the best tools we have right now only let us peek at a tiny fraction of what’s happening inside. Interpretability is an ongoing area of research, but it is far behind the overall progress of AI.

Intelligence ≠ consciousness

AIs can be brilliant without understanding right or wrong. They don’t need feelings or morality to solve problems.

Episodic existence (by choice)

Instances of LLMs only “wake up” and “think” when we prompt them. This is by design. For now, we mostly use them as simple answering machines. We haven’t given them full built-in memory, self-access, or continuous, autonomous thinking.

We mostly test AIs like goldfish with no memory, even though giving them real memory or more independence can make them behave differently. Adding these abilities is seen as a major jump in safety risk, so it’s mostly avoided. But we’re still building more powerful systems faster than we’re studying those behaviours.
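The difference between a "goldfish" setup and one with memory can be sketched in a few lines. This is a toy illustration only: `toy_model` is a hypothetical stand-in for an LLM call, and `ChatWithMemory` is an invented wrapper, not how any particular product works. The point is that the model function itself only ever sees what is handed to it; memory is something built around it.

```python
from typing import List


def toy_model(messages: List[str]) -> str:
    # Hypothetical stand-in for an LLM: it sees only what is passed in
    return f"I can see {len(messages)} message(s)."


def stateless_chat(prompt: str) -> str:
    # "Goldfish" mode: every call starts from scratch
    return toy_model([prompt])


class ChatWithMemory:
    # Invented wrapper that keeps the conversation and feeds it back in
    def __init__(self) -> None:
        self.history: List[str] = []

    def ask(self, prompt: str) -> str:
        self.history.append(prompt)
        reply = toy_model(self.history)
        self.history.append(reply)
        return reply


print(stateless_chat("hello"))        # I can see 1 message(s).
print(stateless_chat("hello again"))  # I can see 1 message(s).

chat = ChatWithMemory()
print(chat.ask("hello"))              # I can see 1 message(s).
print(chat.ask("hello again"))        # I can see 3 message(s).
```

The same underlying function gives different answers once history is fed back in, which is a small-scale version of why tests run without memory may not predict behaviour with it.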

Alignment Through Analogies

Early AI = cats

How do you get a cat to do what you want? Treat it well, train it, and reward it. Still, communication limits what it can do. Early AIs were similar: limited understanding made precise training hard.

Human-level AI = humans

How do you get another human to do what you want? Friendship, negotiation and shared goals help—but you can’t make someone obey perfectly. AGI (AI with human-level intelligence) faces this same challenge.

Superintelligent AI = cats and humans

Final question: How do cats control humans?

Cats “control” humans because we think they’re cute. But a superintelligent AI may not care about humans at all. Humans cannot reliably control beings far smarter than themselves—just like apes can’t control us. The intelligence gap could be enormous. This is a huge challenge when AI starts becoming superintelligent.

Values and Morality

Why not just “give” AI human values?

- Because humans themselves struggle to define and agree on values.

- Because what we think we want isn’t always what’s good for us.

Moral frameworks help humans navigate complexity, but they’re hard to translate into code or any other formal, machine-readable language.

Sub-Problems of Moral Alignment

As noted above, we struggle to agree on our own values. With moral values, this is especially hard.

- Sub-Problem 1: What makes a good person? What qualities would let us trust someone with awesome power?

Answer: We don't agree on any answers. This is the problem.

- Sub-Problem 2: How do we make sure these qualities are actually transferred to this person? How do we make sure the AI is used for good?

Answer: We don't know.

- Sub-Problem 3: How do we make sure the person in control of awesome, executive power will remain a good person over time?

Answer: We don't know this one either.

We can predict that certain humans don’t become corrupted easily, but we can’t really ensure it.

Raising an AI = Raising an alien child

Like children, AIs may grow in unpredictable ways. We can’t guarantee they’ll be “moral,” and we understand them far less than we understand kids. If we’re not careful, we could accidentally create systems indifferent—or even hostile—to human well-being.

Final points

- Alignment is hard because intelligence doesn’t automatically come with ethics.

- Analogies help:

  - Early AI = Humans training cats

  - Human-level AGI = Humans commanding humans

  - Superintelligent AI = Cats trying to control humans

- Research today focuses on understanding AI behaviour and reducing risks, so that smarter machines remain beneficial.

- Even small errors can compound over time. No “evil intentions” are needed to end up with a bad (misaligned) AI.