AI Safety Priorities

AI safety focus according to my personal priorities and philosophies

Right now, we need momentum over perfection

Personally, I am currently working on evaluation methods and metrics for moral alertness and ontological coherence (you can't be moral if you don't have good models of what is real and what is not).

MY SPECIFIC KEY PRIORITIES:

What I believe we need to focus on most right now...

From society in general:

a) Ethics as a foundation for all strong-AI research and development

b) Decentralized risk as a foundational safety principle

c) Diverse human input and human-in-the-loop oversight as a foundational principle for all AI-related work in the current era

From developers and safety researchers:

d) Predetermined mechanisms and protocols, carefully designed as the key communication, control and implementation solutions for strong AI

e) Strong fail-safes in all AI systems, protecting humans, AI models and the infrastructure that connects us all

All development strategy must consider the purpose and impact of each implementation.

All safety R&D must be proactive and dynamic.

The strategic "big picture" risk

Local misuse is already very dangerous, but the prospect of AI enabling a dictatorship to achieve supremacy, or of a rogue AI seizing power itself, is even scarier. How likely is this?

The stakes are very high. How high, we do not know, because we cannot tell how many serious roadblocks and speed bumps lie ahead. But we do know there is a race going on.

I do not personally believe we are on course towards "the singularity", but the leading AI developers are definitely aiming for strategic supremacy through strong AI capable of self-improvement. (Read more on how I view AI progress under "About this site".)

Let us therefore redefine existential AI risk as Agency Risk.

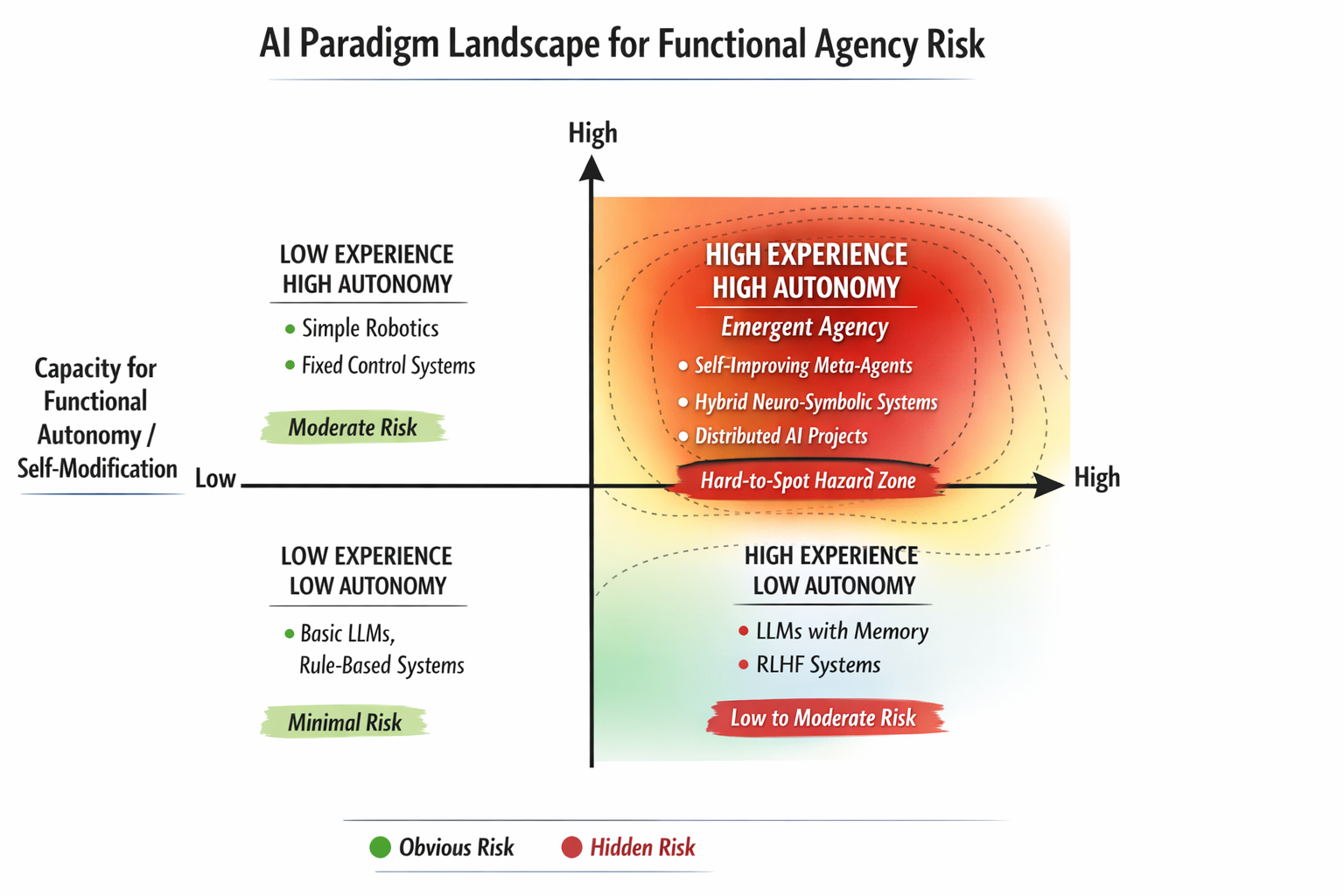

Based on current understanding, I visualize this paradigm danger as a heat map.

The heat map cannot, of course, predict black swans, but it is useful to think of AI risk along two axes: one is autonomy, the other is the degree of internalized experience and feedback.

When internal experience (functionally: memory) AND autonomy (functionally: access to self-improving code) align, you face danger whether the system is somewhat awake or totally asleep, because the functional risk remains the same.

WHAT THE FIELD NEEDS IN GENERAL:

- More industry outsiders and more diversity in the safety discussions

- More conversations about overarching goals and strategy

- More momentum building with dynamic and partial solutions

- More participation and feedback from civil society on AI development, AI applications and civil infrastructure

Motor vehicle analogy

When it comes to designing motor vehicles, the most important factors to consider are the routes they will travel (design purpose), the steering capabilities (who will be driving), the brakes (you would think this is obvious), and the fuel (you can't run a car on jet fuel or an airplane on diesel).

Putting it all together: there is no point in having a car that can go 270 km/h (170 mph) if it will only be used on small country roads. And if you do build a race car anyway, you need to carefully consider its steering capabilities during acceleration, not just how good the brakes are.

Another example: if you need to transport people from one end of a lake to the other, you don't build a jet engine and put it in a gasoline-powered car meant to drive around the lake. You just build a ferry.

However, given the current state of AI, one could argue that several groups are openly pursuing superintelligence (SI) engines to be used in all sorts of vehicles, licensed and unlicensed, while failing to specify the steering mechanisms, the brakes, or how to fuel them.

The only thing we seem to be doing really effectively, actually, is ruining the environment.